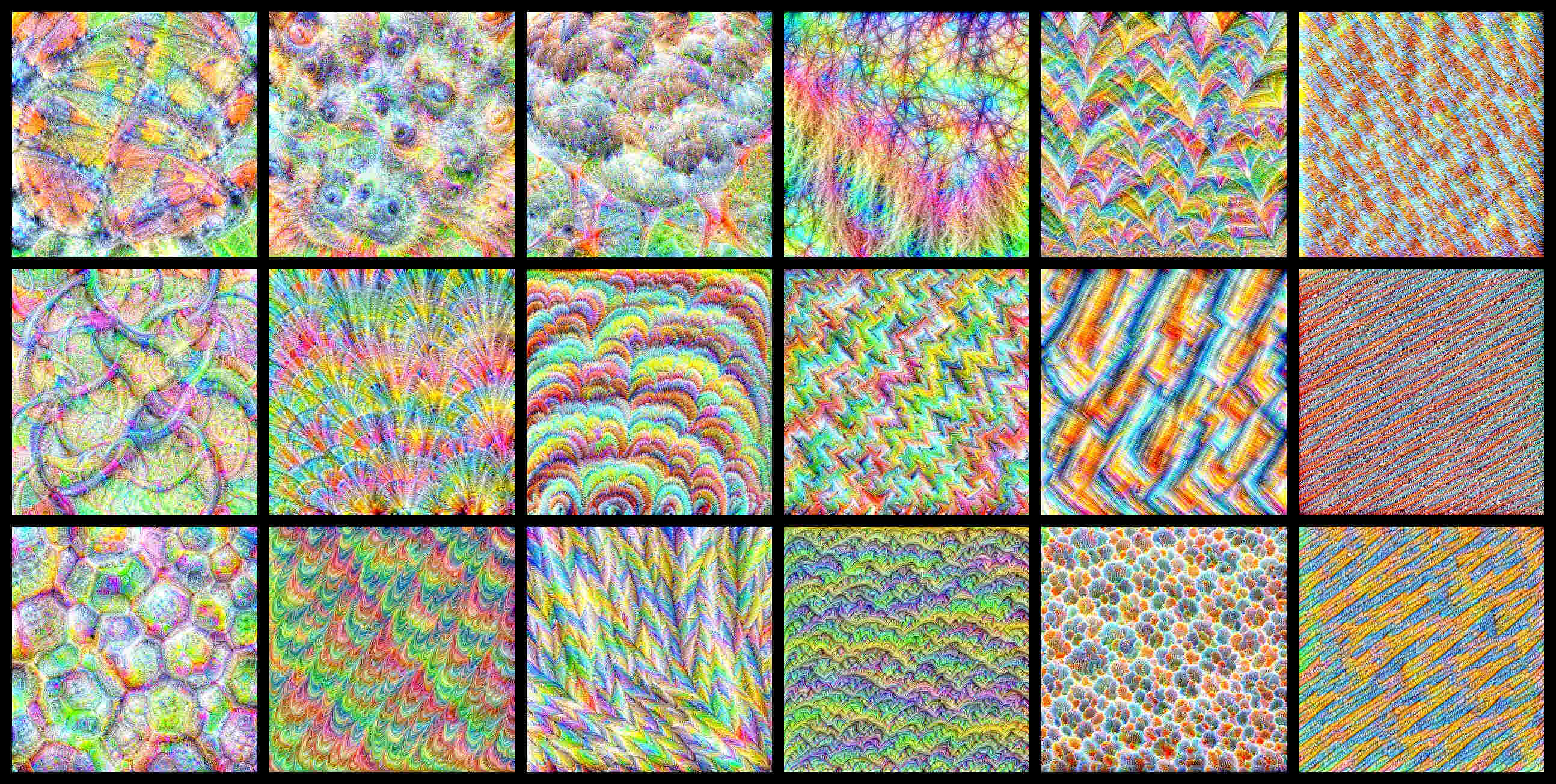

Neural networks are often seen as black boxes, because they learn features in spaces of such high dimension that the features may not be comprehensible to humans. However, this lack of transparency makes it difficult to assess whether a network has learned features that are close to correct. For convolutional neural networks, we can visualize the image that maximizes the activation of a given layer, which gives us an idea of what the network has learned. For example, if a layer in the network responds to a feature that looks like a cat's ear, we would expect an image of a cat fed to the network to light up that particular layer. We reverse-engineer this and generate an input image that maximizes the activation of the given layer, which helps us visualize what kind of feature that particular layer looks for!
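To make the idea concrete, here is a minimal sketch of that reverse-engineering step as plain gradient ascent on the input. It is only an illustration under simplifying assumptions: the "layer" is a single hand-written 3x3 vertical-edge filter followed by a ReLU rather than a trained CNN, the gradient is written out by hand instead of coming from an autodiff framework, and all names (`conv2d_valid`, `layer_activation`, `activation_gradient`, `maximize_activation`) are hypothetical helpers made up for this example.

```python
import numpy as np


def conv2d_valid(image, kernel):
    """Slide the kernel over the image (no padding) and return the responses."""
    kh, kw = kernel.shape
    out_h = image.shape[0] - kh + 1
    out_w = image.shape[1] - kw + 1
    out = np.empty((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out


def layer_activation(image, kernel):
    """Scalar we want to maximize: mean ReLU response of the toy layer."""
    return np.maximum(conv2d_valid(image, kernel), 0.0).mean()


def activation_gradient(image, kernel):
    """Gradient of layer_activation with respect to the input image."""
    pre = conv2d_valid(image, kernel)
    kh, kw = kernel.shape
    grad = np.zeros_like(image)
    for i in range(pre.shape[0]):
        for j in range(pre.shape[1]):
            # ReLU passes gradient only where the pre-activation is positive.
            if pre[i, j] > 0:
                grad[i:i + kh, j:j + kw] += kernel / pre.size
    return grad


def maximize_activation(kernel, size=8, steps=50, lr=0.1, seed=0):
    """Start from small random noise and climb the activation by gradient ascent."""
    rng = np.random.default_rng(seed)
    image = rng.normal(scale=0.1, size=(size, size))
    start = layer_activation(image, kernel)
    for _ in range(steps):
        image += lr * activation_gradient(image, kernel)
    return image, start, layer_activation(image, kernel)


# Toy "feature": a filter that responds to dark-to-bright vertical edges.
edge_kernel = np.array([[-1.0, 0.0, 1.0],
                        [-1.0, 0.0, 1.0],
                        [-1.0, 0.0, 1.0]])

image, start, end = maximize_activation(edge_kernel)
print(f"activation before: {start:.4f}, after: {end:.4f}")
```

The generated `image` drifts toward the pattern the filter responds to, which is the same effect we look for in a real network. With a trained CNN, the hand-written gradient would typically be replaced by automatic differentiation, and some regularization of the image is usually added to keep it interpretable.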